Samsung Electronics unveils 'PIM technology' application cases at Hot Chips conference

Performance and energy efficiency improvement without system change

Aiming for an early lead in DRAM and mobile markets... Global cooperation

Samsung Electronics has unveiled a range of new-concept memory semiconductors equipped with artificial intelligence (AI) engines. Following the February debut of 'HBM-PIM', which applied 'Processing in Memory (PIM)' technology to high-bandwidth memory, the company is rapidly expanding its PIM product portfolio to DRAM modules and mobile DRAM.

PIM is a semiconductor technology that performs AI computation inside the memory itself. Samsung Electronics' strategy is to use PIM as a next-generation convergence technology to shift the memory paradigm and take the lead in the ecosystem.

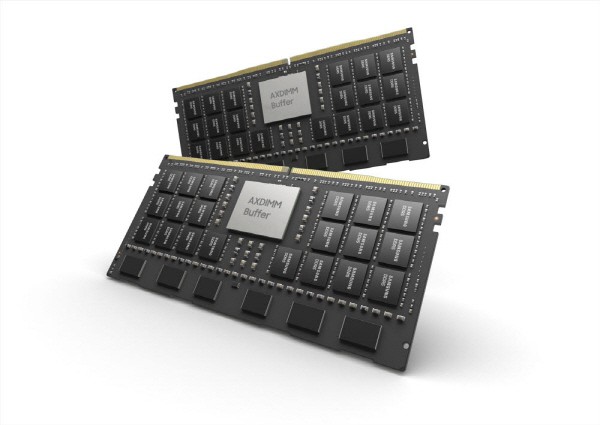

At the 'Hot Chips' conference held online on the 24th, Samsung Electronics introduced a range of products and application cases that use PIM technology. PIM is a next-generation technology that adds a processor function for computing tasks inside the memory, and was first developed by Samsung Electronics. The products introduced this time are 'AXDIMM (Acceleration DIMM)', which mounts an AI engine on a DRAM module, and 'LPDDR5-PIM', which applies PIM to mobile DRAM.

AXDIMM is the first product to extend PIM technology from the chip level to the module level. Each rank, the operating unit of a DRAM module, is equipped with an AI engine, so calculations can be carried out within the DRAM module. Because less data has to move between the CPU and the DRAM module, energy efficiency can also improve greatly. Another feature is that AXDIMM can be applied without changing the existing system. In Samsung Electronics' own tests of AXDIMM, performance doubled and system energy efficiency increased by 40%.
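To see why computing inside each rank reduces data movement, consider a rough sketch rather than a description of Samsung's design. It assumes a hypothetical workload in which many fixed-size vectors stored across the ranks are summed into one result. The function names, the four-rank layout, and the vector size are all illustrative assumptions, and the model counts only the bytes that cross the memory channel.

```python
# Toy model only: the workload and all sizes are illustrative assumptions,
# not Samsung specifications or measurements.

def bytes_moved_host_side(num_vectors: int, vector_bytes: int) -> int:
    """CPU does the summing, so every vector crosses the memory channel."""
    return num_vectors * vector_bytes


def bytes_moved_in_module(vectors_per_rank: list[int], vector_bytes: int) -> int:
    """Each rank holding data sums locally and returns one partial result."""
    active_ranks = sum(1 for count in vectors_per_rank if count > 0)
    return active_ranks * vector_bytes


vectors_per_rank = [20, 20, 20, 20]  # hypothetical: 80 vectors over 4 ranks
vector_bytes = 256                   # hypothetical: 64 float32 values each

host = bytes_moved_host_side(sum(vectors_per_rank), vector_bytes)
module = bytes_moved_in_module(vectors_per_rank, vector_bytes)

print(f"host-side reduction: {host} bytes over the channel")
print(f"in-module reduction: {module} bytes over the channel")
print(f"traffic ratio: {host / module:.0f}x")
```

Under these assumed numbers, channel traffic falls from 20,480 bytes to 1,024 bytes. The model ignores command overhead, caching, and the energy cost of the in-module engines, so it indicates only the direction of the effect, not the size of the gains Samsung reported.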

Samsung Electronics' LPDDR5-PIM applies PIM technology to mobile DRAM. It can maximize the performance of 'on-device AI', in which AI computation runs on the mobile phone itself without a connection to a data center. In tests with voice recognition, translation, and chatbot applications, performance more than doubled and energy consumption fell by 60%.

At the conference, Samsung Electronics also unveiled actual system application cases and performance test results for HBM-PIM, which it first announced in February. When HBM-PIM was installed in an AI accelerator system commercialized by Xilinx, a Field-Programmable Gate Array (FPGA) company, performance was 2.5 times higher and system energy consumption was 60% lower than with the existing 2nd generation high-bandwidth memory (HBM2).

Samsung Electronics plans to diversify its PIM product portfolio and secure leadership in the next-generation memory semiconductor ecosystem by cooperating with various global companies. Its strategy is to change the paradigm of the memory market through the convergence of AI and memory.

Namsung Kim, executive director of the DRAM development department in Samsung Electronics’ memory division, said, “HBM-PIM is the industry’s first customized memory solution in the AI field, and it has shown strong potential for commercial success, as it has already been installed in customers’ AI accelerators. In the future, through the standardization process, it will be expanded to HBM3 for next-generation supercomputing and AI, mobile memory for on-device AI, and DRAM modules for data centers.”

By Staff Reporter Dongjun Kwon (djkwon@etnews.com)